Introduction

For a backend system, performance is critical to maintaining a seamless user experience. One of the most effective ways to improve it is data caching. Caching reduces the load on data sources, decreases latency, and improves the responsiveness of an application. In this post, we will explore various data caching strategies, their benefits, and how to implement them effectively.

What is Data Caching?

Data caching is the process of storing copies of previously fetched data in a temporary storage location so that future requests for that data can be served faster. Caching can be implemented at various levels, including client-side, server-side, and database-level.
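To make the idea concrete, here is a minimal sketch of a cache sitting in front of a slow data source. The names `slowLookup` and `getItem` are made up for illustration, and a plain `Map` stands in for whatever cache store a real application would use.

```javascript
// A plain Map acts as the temporary storage location.
const cache = new Map();
let sourceCalls = 0; // counts how often the (pretend) slow source is hit

// Hypothetical stand-in for a database or remote API call.
function slowLookup(id) {
  sourceCalls += 1;
  return { id, name: `item-${id}` };
}

// Serve from the cache when possible; otherwise fetch and remember the result.
function getItem(id) {
  if (cache.has(id)) {
    return cache.get(id);
  }
  const value = slowLookup(id);
  cache.set(id, value);
  return value;
}

const first = getItem(7);  // goes to the source
const second = getItem(7); // served from the cache
console.log(first === second, sourceCalls);
```

The second call never reaches the source, which is the whole point: repeated requests for the same data cost a map lookup instead of a round trip.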

Benefits of Data Caching

- Reduced latency due to faster data retrieval

- Fewer database queries, as the most frequent ones are served from the cache

- Improved scalability

- Faster page load times and a smoother user experience

Types of Caching Strategies

1. In-Memory Caching

In-memory caching stores data in a server’s RAM for very fast access.

Tools used for in-memory caching:

- Redis: An open-source, in-memory data structure store used as a database, cache, and message broker. Redis supports various data structures like strings, hashes, lists, sets, and sorted sets.

- Memcached: A general-purpose distributed memory caching system that stores data in key-value pairs. It is known for its simplicity and high performance.

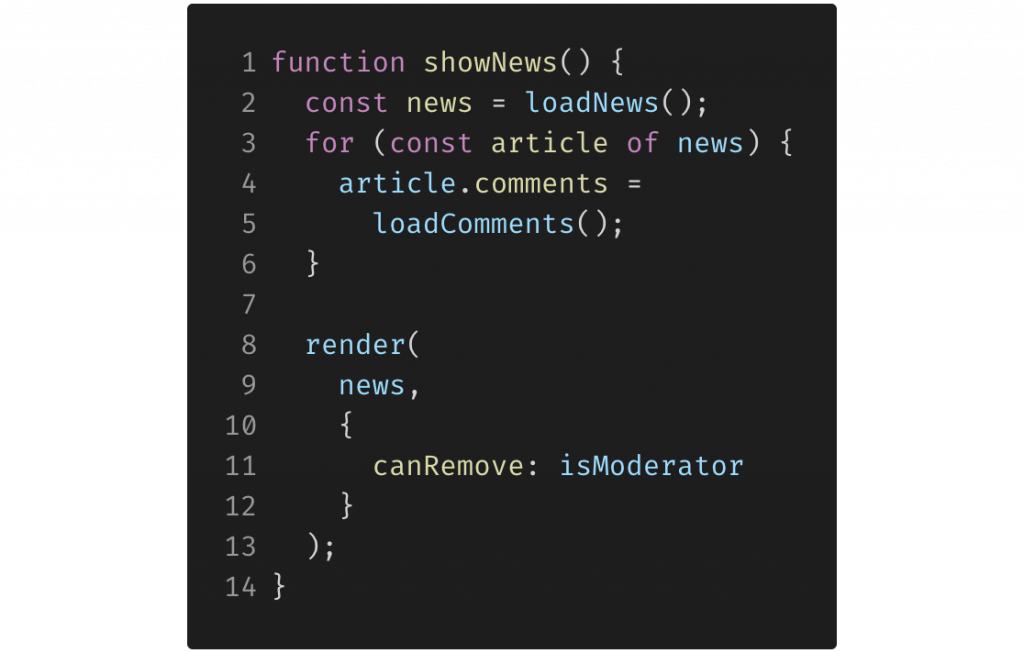

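As an example, here’s how we can implement in-memory caching with Redis in an Express.js application:

    const express = require('express');
    const redis = require('redis');

    const app = express();

    // Create a Redis client (this uses the promise-based node-redis v4+ API)
    const redisClient = redis.createClient();
    redisClient.on('error', (err) => console.error('Redis error:', err));

    // Middleware to check cache
    const cacheMiddleware = async (req, res, next) => {
      const { id } = req.params;
      try {
        const data = await redisClient.get(id);
        if (data !== null) {
          return res.send(JSON.parse(data)); // cache hit
        }
        next(); // cache miss: continue to the route handler
      } catch (err) {
        next(); // if the cache fails, fall back to the data source
      }
    };

    // Route with in-memory caching
    app.get('/data/:id', cacheMiddleware, async (req, res) => {
      const { id } = req.params;
      try {
        // FetchDataFromSource is a placeholder for your own data-access function
        const data = await FetchDataFromSource(id);
        // Store the data in Redis with an expiration time of 3600 seconds (1 hour)
        await redisClient.setEx(id, 3600, JSON.stringify(data));
        res.send(data);
      } catch (err) {
        res.status(500).send({ error: 'Failed to fetch data' });
      }
    });

    // Connect to Redis before accepting requests (the port is an arbitrary example)
    redisClient.connect().then(() => app.listen(3000));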

2. Distributed Caching

Definition: Distributed caching involves spreading the cache across multiple servers, providing a scalable solution for large-scale applications.

Because the cache is spread over several servers, the system can remain available even if some of them fail. A distributed cache also scales horizontally: adding more nodes to the cache cluster helps accommodate growing traffic over time.
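As a rough illustration of how keys can be spread across cache servers, the sketch below hashes each key and uses the result to pick a node. The node names are hypothetical, each node is simulated with an in-process `Map` rather than a network client, and the modulo placement is a deliberately simple assumption. It shows only partitioning, not replication or failover.

```javascript
const crypto = require('crypto');

// Hypothetical cache nodes; a real cluster would hold network clients, not Maps.
const nodes = ['cache-a', 'cache-b', 'cache-c'].map((name) => ({
  name,
  store: new Map(),
}));

// Hash the key and map it onto one of the nodes (simple modulo placement).
function nodeFor(key) {
  const digest = crypto.createHash('sha256').update(key).digest();
  return nodes[digest.readUInt32BE(0) % nodes.length];
}

function put(key, value) {
  nodeFor(key).store.set(key, value);
}

function get(key) {
  return nodeFor(key).store.get(key);
}

put('user:1', { name: 'Ada' });
put('user:2', { name: 'Lin' });
console.log(nodeFor('user:1').name, get('user:1'));
```

One known weakness of modulo placement is that changing the number of nodes remaps most keys. Consistent hashing is a common technique for reducing that reshuffling.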

Tools used for distributed caching:

- Apache Ignite: A distributed database, caching, and processing platform designed to handle large-scale data sets across multiple servers.

Implementing a distributed caching system with Apache Ignite involves setting up a cluster of Ignite nodes, configuring the caches, adding the Ignite dependencies and configuration to the application, and finally connecting to the cluster from the application.

- Hazelcast: An open-source in-memory data grid that provides distributed caching and processing.

Use Cases:

- Large-scale web applications where a single server’s cache is insufficient to handle the load.

- Applications requiring high availability and fault tolerance, as distributed caches can replicate data across nodes.

3. HTTP Caching

Definition: HTTP caching is a technique, used by web browsers and by intermediaries such as proxies and CDNs, to store responses to HTTP requests, reducing the need for repeated server requests.

It can be achieved using HTTP headers like Cache-Control, Last-Modified, and ETag.

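The Cache-Control header specifies caching directives that control how and for how long the browser caches resources. Cache-Control and ETag are set by the server on the response; the client can later revalidate its stored copy by sending the ETag back in the If-None-Match request header.

Here’s an example of how a client-side application can revalidate a cached response:

    fetch('https://example.com/api/data', {
      headers: {
        // ETag received with an earlier response ('abc123' is a placeholder value)
        'If-None-Match': '"abc123"',
      },
    })
      .then((response) => {
        if (response.status === 304) {
          // Not modified: keep using the locally stored copy
        } else {
          // Handle the fresh response. The server decides how long it may be
          // cached, e.g. Cache-Control: max-age=3600 caches it for 1 hour
        }
      });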
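On the server side, the same headers might be produced roughly as follows. This is a sketch under simplifying assumptions: `computeETag` and `buildResponse` are illustrative names, the ETag is a hash of the body, and `If-None-Match` is compared as a single exact value, whereas real servers also handle lists of tags and weak validators.

```javascript
const crypto = require('crypto');

// Derive an ETag from the response body (the hashing scheme is an illustrative choice).
function computeETag(body) {
  const hash = crypto.createHash('sha1').update(body).digest('hex');
  return `"${hash}"`;
}

// Decide between a full 200 response and an empty 304 revalidation response.
// requestHeaders is assumed to use lowercase header names, as Node's http module does.
function buildResponse(requestHeaders, body) {
  const etag = computeETag(body);
  const headers = { 'Cache-Control': 'max-age=3600', ETag: etag };
  if (requestHeaders['if-none-match'] === etag) {
    return { status: 304, headers, body: '' };
  }
  return { status: 200, headers, body };
}

const first = buildResponse({}, 'hello');
const second = buildResponse({ 'if-none-match': first.headers.ETag }, 'hello');
console.log(first.status, second.status);
```

The first request gets the full body along with its validators. The second presents the ETag it was given, and because the content has not changed, the server can answer without resending the body.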

Use Cases:

- Static websites where the content does not change frequently.

- Delivering static assets (images, CSS, JavaScript) to improve page load times.

4. Database Caching

Database caching keeps frequently accessed data in a cache, which reduces query execution time and improves overall application performance.

Some database systems come with built-in query caching; MySQL, for example, shipped a query cache until it was removed in version 8.0. Query caching stores the results of expensive, frequently run queries in memory. When the same query is run again, the database doesn’t re-execute it; instead, it returns the results from the cache.

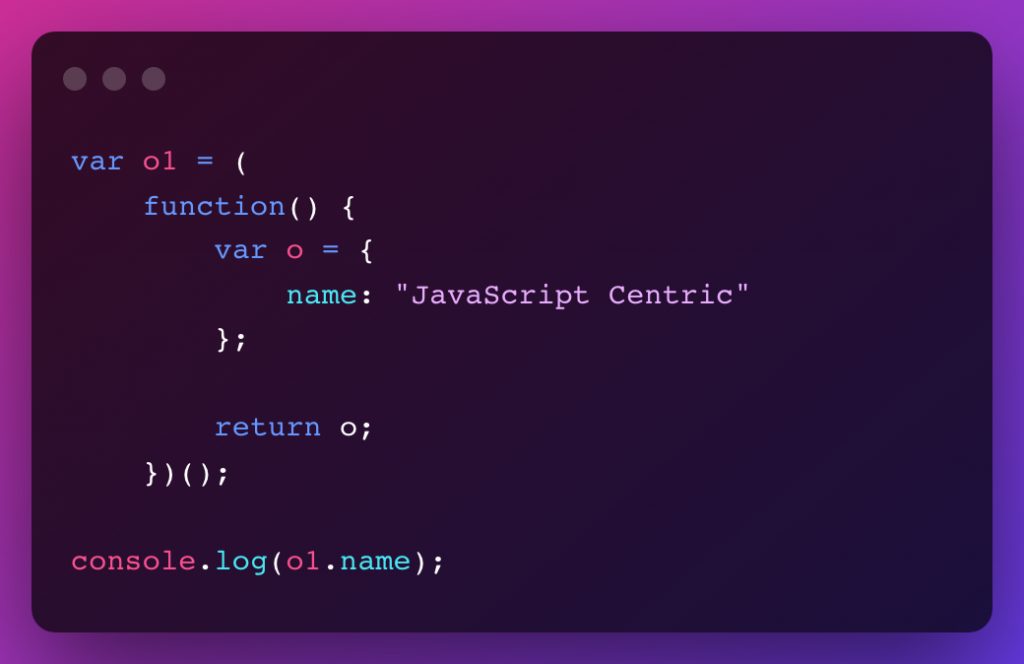

Here’s an example of caching query results using Redis in a Node.js application:

    const redis = require('redis');

    // Promise-based node-redis v4+ client
    const client = redis.createClient();

    // Return the cached result for a query, or null on a cache miss
    async function getCachedData(query) {
      const cached = await client.get(query);
      return cached === null ? null : JSON.parse(cached);
    }

    // Store a query result as JSON, using the query text as the key
    async function cacheData(query, data) {
      await client.set(query, JSON.stringify(data));
    }

    // Usage:
    const query = "SELECT * FROM products WHERE category = 'electronics'";

    async function main() {
      await client.connect();
      const cachedData = await getCachedData(query);
      if (cachedData) {
        console.log('Cached data:', cachedData);
      } else {
        // Execute query and cache results
        // (executeQuery is a placeholder for your database client's query function)
        const data = await executeQuery(query);
        await cacheData(query, data);
        console.log('Query result:', data);
      }
    }

    main().catch((err) => {
      console.error('Error:', err);
    });

Use Cases:

- High-read applications where certain queries are executed frequently.

- Reducing database costs by offloading read-heavy traffic to a cache.

Best Practices for Implementing Caching

- Determine What to Cache: Not all data should be cached. Data that changes frequently is a poor candidate because caching it risks serving stale data.

- Set Appropriate TTL (Time-to-Live): Set an effective expiration time for cached data to keep the data fresh and relevant.

- Handle Cache Invalidation: Implement strategies to update or invalidate cache entries when the underlying data changes.

- Monitor and Analyze Cache Performance: Track the cache hit-to-miss ratio to measure how effective the cache is.
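The practices above can be sketched in a few lines. The `TtlCache` class below is a hypothetical illustration, not a library API: it expires entries after a TTL, supports explicit invalidation, and counts hits and misses. The clock is injected only so the example can simulate time passing.

```javascript
class TtlCache {
  constructor(ttlMs, now = () => Date.now()) {
    this.ttlMs = ttlMs;
    this.now = now; // injectable clock, handy for demos and tests
    this.entries = new Map();
    this.hits = 0;
    this.misses = 0;
  }

  // Return the value if present and not expired; otherwise record a miss.
  get(key) {
    const entry = this.entries.get(key);
    if (entry && entry.expiresAt > this.now()) {
      this.hits += 1;
      return entry.value;
    }
    this.entries.delete(key); // drop expired entries lazily
    this.misses += 1;
    return undefined;
  }

  set(key, value) {
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
  }

  // Call this when the underlying data changes.
  invalidate(key) {
    this.entries.delete(key);
  }

  // Fraction of lookups served from the cache.
  hitRatio() {
    const total = this.hits + this.misses;
    return total === 0 ? 0 : this.hits / total;
  }
}

let fakeTime = 0;
const cache = new TtlCache(1000, () => fakeTime);
cache.set('price:42', 19.99);
cache.get('price:42');        // hit
fakeTime = 1500;
cache.get('price:42');        // miss: the entry has expired
cache.set('price:42', 21.5);
cache.invalidate('price:42'); // underlying data changed
cache.get('price:42');        // miss: explicitly invalidated
console.log(cache.hitRatio());
```

A persistently low hit ratio usually suggests that the TTL is too short, that the wrong data is being cached, or that invalidation is firing more often than necessary.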

Conclusion

Caching is a powerful technique for improving the performance and scalability of backend systems. By understanding and implementing the right caching strategies, developers can significantly enhance their applications’ efficiency, reduce latency, and provide a better user experience.